📡 My technology > your technology

Thoughts on why the Online Safety Bill is probably a pile of trash, constructed from other trash

Hello there, children of 56k internet. This week my body decided that I don’t deserve sleep, so naturally that led me to think that YOU don’t deserve this newsletter. But I couldn’t help myself. I read one STUPID story about the STUPID Online Safety Bill and I couldn’t ignore it. So this week’s issue will focus only on that. For a more traditional Horrific/Terrific, read last week’s.

This bill has been floating around like a putrid fart for years now. I remember reading the Online Harms White Paper in 2019, and then nearly a year ago I wrote about the bill in this very newsletter. There are key ‘changes’ to the bill that the UK press seems to have only just picked up on, but these were already being discussed last March. So maybe there’s been a lot of flip-flopping, but very little has actually changed:

- The language around content that is legal but harmful is still there; it’s just less explicit
- Prison time for tech execs instead of fining the companies was discussed a year ago, and is still being discussed now
- The vague rules and even vaguer modes of enforcement still only apply to ‘the biggest and most popular’ online services that host user-generated content

The vapid career politicians who threw this bill together over what I’m guessing was a four-hour lunch break have, unsurprisingly, only a rudimentary understanding of how online social spaces operate. So there are three really Annoying Things enmeshed in this bill that we should probably take the time to examine. Those are:

- Lack of nuance around what constitutes ‘social media’
- Just like… literally having no idea what free speech is, and therefore inadvertently restricting it with the power of ignorance
- The dangerous assumption that the answer to ‘bad technology’ is adding more technology

Okay let’s goooooooo….

☝️ Social media is more than just Instagram and TikTok

This bill manages to be vague enough that you have no idea what it’s really going to do, but also narrow enough in scope that whatever it does do, it either won’t be enough, or it will be too much in one place. I’m a GOOD writer so no, that sentence WASN’T too long, actually.

The companies affected will be those that host user-generated content and are also ‘the biggest and most popular’. There is no mention of how they will measure what is ‘big’ and what is ‘popular’. Surely, with social media, ‘big’ is ‘popular’, because the number of users on a platform is pretty much the only way to gauge its size.

Anyway, even if you disregard all that, you’re still left with a cack-handed interpretation of ‘social media’. The phrase ‘social media’ is becoming less relevant with every passing week. These days online social spaces are extremely varied; some are buried deep within the tightest sub-fandom niche, and others appeal to nearly everyone and are extremely accessible. Either way, I don’t think the UK government are aware of anything beyond traditional newsfeed platforms.

What about Minecraft? Apex Legends? Second Life? VR Chat? Or Roblox? Ah yes, good old Roblox — a gaming platform designed for children, with almost no protection against bullying, or adults who exploit them and steal their money. Just look at this tweet thread where a mother describes the kinds of ‘games’ you can find on Roblox, and what happens in them. One of them is called ‘public toilet simulator’???

Wikipedia also runs on user-generated content, so do the rules apply to it? What about people who run Discord servers? Or Mastodon instances? If they ‘fail to protect children from harmful content’, will server owners be sent to prison?? What happens to the Wikipedia pages that outline school shootings and other horrific things that probably count as ‘legal but harmful’?? Will the regulator just decide? That leads us to the next annoying thing…

✌️ The bill claims to protect free speech, but will probably degrade it

So, according to the fact sheet, Ofcom (the regulator) will “be able to make companies change their behaviour” and “help companies to comply with the new laws by publishing codes of practice, setting out the steps a company should take to comply with their new duties”.

⚡ Shocking: this is not a good way to protect free speech. True free speech would not involve some central authority (Ofcom) dictating a code of practice to online platforms, or forcing the platforms to change their behaviour. I’m not even necessarily saying these things are a bad idea; the rules that Ofcom come up with might be great (lmao… doubt). So why paint this as a positive step towards preserving free speech? The bill should probably never have mentioned free speech at all. You can’t sit there and demand to radically change the way a website like Wikipedia works ‘in the name of free speech’. But of course, they didn’t even think about Wikipedia while they sat in the pub and their underpaid assistants came up with these rules, did they?

The bill is definitely part of the current trend of new laws that claim to protect the free speech of individuals but really don’t. They also seem to work hard to actively restrict the free speech of the platforms themselves. Any rule that says ‘platforms have to do xyz with their content’ limits the platform’s freedom of expression, because it means they don’t even get to create their own community guidelines.

What about places like 4chan or Kiwi Farms? These platforms may be too small to be in scope (but who the fuck knows; they have yet to clarify the scope!). But why should size be the only thing we go by?? 4chan may be ‘smaller’ than Facebook, but because of the nature of its user base, it certainly has the potential to inflict serious harm. If they were organised enough to invent Rick-Rolling, they can probably do anything… that’s all I’m saying.

🤟 Finally, the ultimate lawmaker’s fix: if technology is bad, add more technology

Right, I’m just going to say it: the solution to eliminating child sexual abuse material (CSAM) online is not to spy on people. It’s just not. This is a social issue that requires approaches sitting outside online platforms and the tech industry at large. Neither social media nor the internet is the root cause of child p*rn. We all know this, and yet the proposed solutions are always something like:

“Ofcom will be able to require a platform to use highly accurate technology to scan public and private channels for child sexual abuse material. The use of this power will be subject to strict safeguards to protect users’ privacy. Highly accurate automated tools will ensure that legal content is not affected”

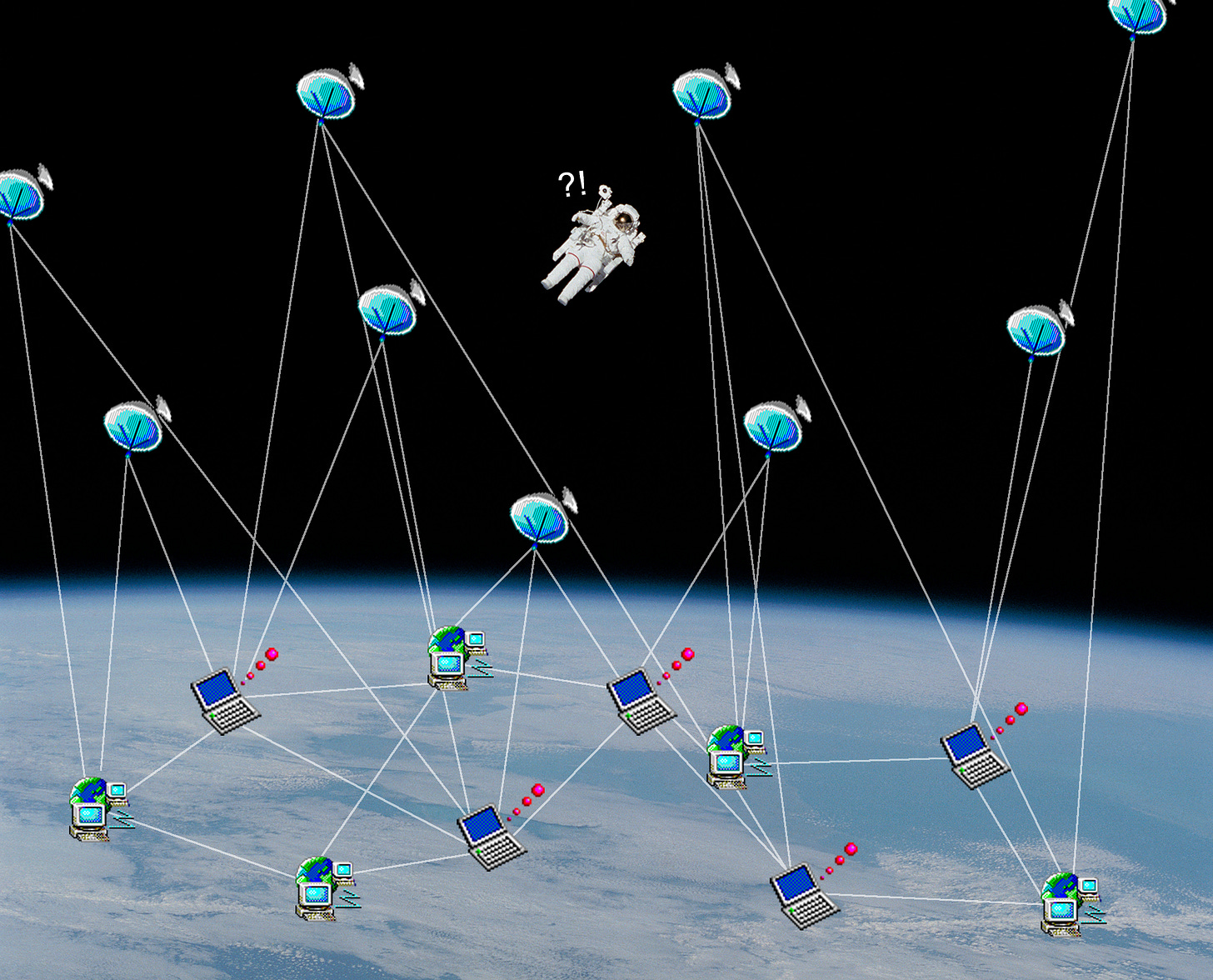

💆‍♀️ I’m just… so tired of reading things like this. There’s no such thing as ‘highly accurate automated tools’. When you scan billions of private messages, even a tiny error rate adds up to a huge number of innocent people being flagged, so said tools will probably catch loads of false positives, invade user privacy, and cause a lot of general upset. No government should have any sort of hand in our private interactions: just because they happen online doesn’t mean they should be open to government scrutiny. This is a classic case of using shoddy technology to counter the effects of other shoddy technology, when really, technology is not the problem at all.
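To put some rough numbers on the false-positive problem, here’s a back-of-the-envelope sketch in Python. Every number in it is made up for illustration (none of them come from the bill or from Ofcom): 10 billion messages scanned, 1 in a million of them actually illegal, and a hypothetical scanner that catches 99% of real cases while wrongly flagging just 1 in 1,000 innocent messages. That’s a tool you could easily market as ‘highly accurate’.

```python
# Back-of-the-envelope base-rate sketch. All numbers are HYPOTHETICAL,
# chosen for illustration only; none come from the bill or from Ofcom.

def flagged_counts(messages, bad_per_million, caught_per_hundred, false_flags_per_thousand):
    """Return (true positives, false positives) for a hypothetical scanner.

    Integer arithmetic keeps the counts exact.
    """
    actually_bad = messages * bad_per_million // 1_000_000
    innocent = messages - actually_bad
    true_positives = actually_bad * caught_per_hundred // 100
    false_positives = innocent * false_flags_per_thousand // 1_000
    return true_positives, false_positives


tp, fp = flagged_counts(
    messages=10_000_000_000,     # assumed: messages scanned
    bad_per_million=1,           # assumed: 1 in a million is actually illegal
    caught_per_hundred=99,       # assumed: scanner catches 99% of real cases
    false_flags_per_thousand=1,  # assumed: wrongly flags 1 in 1,000 innocent messages
)
share_real = tp / (tp + fp)  # fraction of all flags that are genuine

print(f"Correctly flagged: {tp:,}")
print(f"Innocent messages flagged: {fp:,}")
print(f"Share of flags that are real: {share_real:.2%}")
```

With those made-up numbers, nearly 10 million innocent messages get flagged in order to catch 9,900 real ones, so only about 1 in 1,000 flags is genuine. Fiddle with the assumptions however you like: as long as the illegal material is much rarer than the scanner’s error rate, the false positives swamp the real hits.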

This bill is as clear and persuasive as my CV was when I was 21. They should probably throw it out and start again. Oh, and… get some experience working behind the counter of a high-street chemist.

Thank you for reading. I literally threw this together in one afternoon, so I hope it made sense.